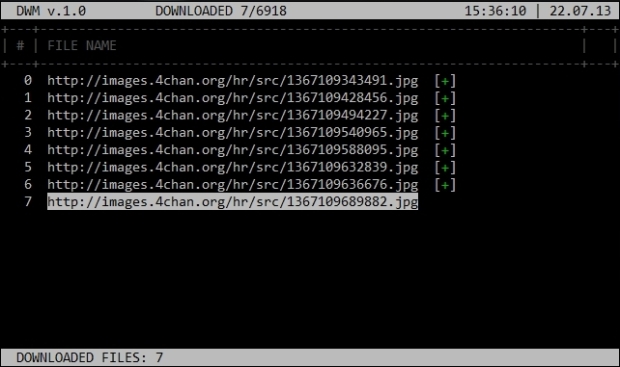

[ command line image download manager ]

Two decades ago, browsing the internet over a 56k modem was agonizing whenever you hit a web page full of pictures. The images had to be compressed, and the compression was, of course, lossy.

Now you can download high-resolution pictures with the click of a button and wait only a couple of seconds for them to load fully. Bandwidth is no longer the issue.

So what *is* the issue? Finding really high-resolution pictures (above 1920×1080) on a specific, very narrow topic.

If you like (as I do) medieval maps, NASA's best space pictures, landscape photos or old paintings that are hard to find in high resolution, and you won't be offended by occasional nudity, then the /hr board at 4chan.org is the place for you. There you will find many collections of truly amazing pictures, each compiled into a single thread and just waiting for you to grab them. Yes, this is 4chan, famous for light moderation and anonymous posting. As warned above, you might encounter some nudity, but I guess that is the price for top-notch pictures you would otherwise never have found.

The /hr board is a collection of threads whose posts contain pictures. While I really like some of them, I'm not a patient person when it comes to downloading things manually by clicking on each and every one. So I created a bash script that downloads all the pictures for me automatically. It is fairly simple and works in three phases: first, it collects the links to all threads; second, it parses those threads and isolates the links to images; and finally, it downloads those images into a directory named after the current date.
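Phase two boils down to pulling the `href` targets out of a thread's HTML and keeping the ones that point at images. The script itself does this with a `grep`/`sed` chain and keeps every link containing "images"; the sketch below shows the same idea in a slightly simpler form. `extract_hrefs`, `filter_images` and the sample URL are hypothetical, and the regular expressions assume quoted `href` attributes and `.jpg`, `.jpeg`, `.png` or `.gif` file names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the value of every href="..." or href='...'
# attribute found in the HTML read from stdin, one per line.
extract_hrefs() {
    grep -oE "href=(\"[^\"]*\"|'[^']*')" |
        sed -E "s/^href=[\"']//; s/[\"']$//"
}

# Hypothetical helper: keep only links whose file name looks like an image.
filter_images() {
    grep -E '\.(jpe?g|png|gif)$'
}

# Usage on an inline sample instead of a live page
# (prints //images.example.org/hr/src/1.jpg):
extract_hrefs <<'EOF' | filter_images
<a href="res/123">Thread</a>
<a href='//images.example.org/hr/src/1.jpg'>img</a>
EOF
```

Filtering by file extension rather than by host name keeps the sketch independent of where a site happens to serve its images from.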

Although it could download at full speed, I've throttled it: curl fetches the board index pages at 100,000 bytes/s and the individual threads at 10,000 bytes/s, and wget downloads the images at about 200 KB/s (`--limit-rate=200k`).

I think it’s a matter of netiquette not to overload 4chan’s servers.
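The rate limits are easy to misread: the `k` in wget's `200k` means kilobytes, not kilobits. Below is a small sketch that converts such rate strings to bytes per second and estimates how long a transfer takes at that rate. The helper names are hypothetical, and the code assumes the `k` and `m` suffixes are 1024-based, which is how I read the curl and wget manuals; check them against your versions.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a curl/wget style rate ("10000B", "200k", "1m")
# into bytes per second, assuming 1024-based k and m suffixes.
rate_to_bps() {
    local rate=$1 number suffix
    number=${rate%%[!0-9]*}          # leading digits
    suffix=${rate#"$number"}         # whatever follows them
    case $suffix in
        ""|B|b) echo "$number" ;;
        k|K)    echo $(( number * 1024 )) ;;
        m|M)    echo $(( number * 1024 * 1024 )) ;;
        *)      echo "unknown suffix: $suffix" >&2; return 1 ;;
    esac
}

# Hypothetical helper: whole seconds needed to move SIZE bytes at RATE.
transfer_seconds() {
    local size=$1 bps
    bps=$(rate_to_bps "$2") || return 1
    echo $(( (size + bps - 1) / bps ))   # integer division, rounded up
}

transfer_seconds $((3 * 1024 * 1024)) 200k   # a 3 MiB image at 200k: prints 16
```

At that pace, a thread with a hundred 3 MiB images takes roughly 25 minutes, which is the trade-off for going easy on the servers.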

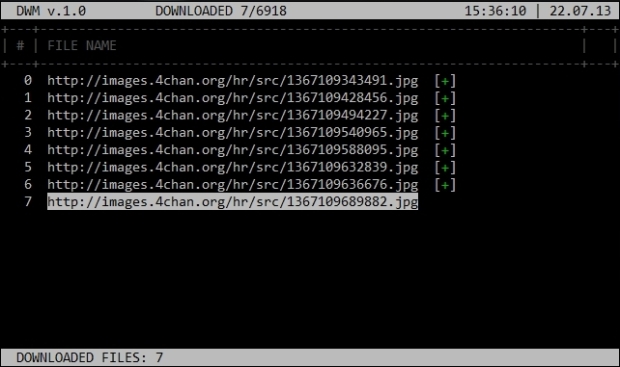

Take a peek at how it looks when executed:

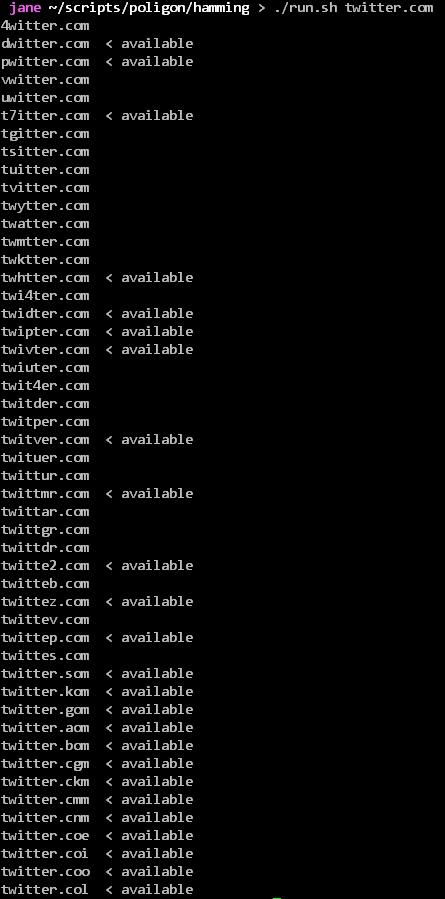

1. Collecting links to sub-pages
2. Collecting links to images
3. Downloading images

The function definitions live in a separate file, shown below.

Without further ado, here it is:

dwm.sh

```bash
#!/bin/bash

# Load the helper functions from the directory this script lives in.
source "$(dirname "$0")/myfunctions.sh"

############################
# VARIABLES                #
############################

today=$(date +%d.%m.%y)          # download directory, e.g. 24.09.12
time=$(date +%H:%M:%S)
cols=$(tput cols)
lines=$(tput lines)
download_counter=0
curltransfer="100000B"           # curl rate limit for the board index pages
margin_offset=0
margin_text_offset=2

top_box_t=0
top_box_b=$((top_box_t + 5))
top_box_l=$((0 + margin_offset))
top_box_r=$((cols - margin_offset - 1))     # columns are numbered 0 .. cols-1
top_box_width=$((cols - 2 * margin_offset))

site="http://boards.4chan.org/hr/"

mkdir -p "./$today"

# Hide the cursor while drawing and restore it however the script exits.
tput civis
trap 'tput cnorm' EXIT
clear

draw_top_box
draw_bottom_box

# Phase 1: collect thread links, drop "board" links and #anchors, de-duplicate.
scrap_links
grep -v "board" links.4chan | cut -d "#" -f 1 | sort -u > uniquelinks.4chan
rm -f links.4chan

# Phase 2: collect the image links from every thread.
scrap_images
sort -u images.4chan > uniqueimages.4chan
rm -f images.4chan

# Phase 3: download the images.
draw_panel
draw_headers
download_images

tput cup 20 0
```
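The link clean-up in `dwm.sh` can be read as a single filter: drop links that contain "board", cut off any `#` anchor so that links to individual posts collapse onto their thread, and de-duplicate. The sketch below wraps that filter in a hypothetical `normalize_thread_links` function and runs it on made-up sample links instead of a live page.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the clean-up done in dwm.sh: drop links that
# contain "board", strip "#fragment" anchors, then de-duplicate.
normalize_thread_links() {
    grep -v "board" | cut -d "#" -f 1 | sort -u
}

normalize_thread_links <<'EOF'
res/100#p101
res/100
res/200#q5
//boards.4chan.org/hr/res/100
EOF
# prints:
# res/100
# res/200
```

One `sort -u` at the end is enough, because `cut` can only create new duplicates, never remove the need to de-duplicate.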

And the required functions file:

myfunctions.sh

```bash
#!/bin/bash
# Helper functions sourced by dwm.sh. They rely on variables set there:
# cols, lines, time, today, site, curltransfer, download_counter and top_box_*.

# Debugging helper: print the first two arguments.
check_image()
{
    echo "1:" "$1"
    echo "2:" "$2"
}

# Column headers of the download panel.
draw_headers()
{
    tput cup $((top_box_t + 2)) $((top_box_l + 2))
    echo -en "\033[1;30m\033[40m#\033[0m"
    tput cup $((top_box_t + 2)) $((top_box_l + 6))
    echo -en "\033[1;30m\033[40mFILE NAME\033[0m"
}

# Phase 3: download every image in uniqueimages.4chan into ./$today, skipping
# names already recorded in 4chan_download.log, and list progress on screen.
download_images()
{
    local scroll_lines=$((lines - 6)) top=4 index=0
    local log=./4chan_download.log allfiles i j filename status

    touch "$log"
    allfiles=$(wc -l < uniqueimages.4chan)
    tput cup $top 0

    while read -r i; do
        filename=${i##*/}                      # last path component of the URL

        # Restart numbering after 999 so the number column stays narrow.
        if (( index > 999 )); then index=0; fi

        # Blank the list area each time it fills up.
        if (( index % scroll_lines == 0 )); then
            tput cup $top 0
            for ((j = 0; j < scroll_lines; j++)); do
                echo -e "\033[32m\033[40m \033[0m"
            done
            tput cup $top 0
        fi

        # Save the cursor and highlight the file being processed.
        echo -ne "\033[s"
        echo -e " $index \033[30m\033[47mhttp:$i\033[0m"

        # DOWNLOADING HERE
        if grep -qxF -- "$filename" "$log"; then
            status="\033[33m\033[40m*"         # yellow *: downloaded earlier
        elif wget -q --limit-rate=200k -P "./$today" "http:$i"; then
            echo "$filename" >> "$log"
            download_counter=$((download_counter + 1))
            status="\033[32m\033[40m+"         # green +: downloaded now
        else
            status="\033[31m\033[40m!"         # red !: download failed
        fi

        # Redraw the line without highlighting and append the status mark.
        echo -ne "\033[u"
        echo -en " $index \033[m\033[40mhttp:$i\033[0m"
        echo -e "\t[$status\033[0m]"
        index=$((index + 1))

        # Update the counter in the title bar, then return to the list.
        echo -ne "\033[s"
        tput cup $top_box_t $((top_box_l + 20))
        echo -en "\033[30m\033[47mDOWNLOADED $download_counter/$allfiles\033[0m"
        echo -ne "\033[u"
    done < uniqueimages.4chan
}

# Print the thread links ("res/...") found on one board page.
board_page_links()
{
    curl -s --limit-rate "$curltransfer" "$1" |
        grep -o '<a .*href=.*>' |
        sed -e 's/<a /\n<a /g' |
        sed -e 's/<a .*href=['"'"'"]//' -e 's/["'"'"'].*$//' -e '/^$/ d' |
        grep "res/" | sort -u
}

# Draw a label ($2) and an empty "[ ... ]" progress bar on screen row $1.
start_progress()
{
    tput cup "$1" $((top_box_l + 1))
    echo -en "\033[1;30m\033[40m$2\033[0m"
    tput cup "$1" $((top_box_l + 20))
    echo -en "\033[1;30m\033[40m[\033[0m"
    tput cup "$1" $((top_box_l + 36))
    echo -en "\033[1;30m\033[40m]\033[0m"
}

# Blank the progress bar on row $1 and mark it complete with a green "[+]".
finish_progress()
{
    local i
    for i in {1..14}; do
        tput cup "$1" $((top_box_l + 19 + i))
        echo -en "\033[1;30m\033[40m \033[0m"
    done
    tput cup "$1" $((top_box_l + 34))
    echo -en "\033[1;30m\033[40m[\033[0m\033[32m\033[40m+\033[0m\033[1;30m\033[40m]\033[0m"
}

# Phase 2: fetch every thread in uniquelinks.4chan and append the image links
# found in it to images.4chan.
scrap_images()
{
    local i urls index=0 position=21 row=$((top_box_t + 5))

    : > images.4chan                           # start with an empty output file
    start_progress $row "SCRAPING IMAGES"
    urls=$(wc -l < uniquelinks.4chan)

    while read -r i; do
        index=$((index + 1))
        tput cup $top_box_t $((top_box_l + 20))
        echo -en "\033[30m\033[47mSCRAPED $index/$urls\033[0m"

        # Advance the progress bar by one dash.
        tput cup $row $((top_box_l + position))
        echo -en "\033[1;30m\033[40m-\033[0m"

        # Pull href targets out of the thread page and keep the image links.
        curl -s --limit-rate 10000B "$site$i" |
            grep -o '<a .*href=.*>' |
            sed -e 's/<a /\n<a /g' |
            sed -e 's/<a .*href=['"'"'"]//' -e 's/["'"'"'].*$//' -e '/^$/ d' |
            grep "images" | uniq >> images.4chan

        # Wrap the progress bar when it reaches the closing bracket.
        position=$((position + 1))
        if (( position == 36 )); then
            tput cup $row $((top_box_l + 36))
            echo -en "\033[1;30m\033[40m]\033[0m"
            position=21
            tput cup $row $((top_box_l + position + 1))
            echo -en "\033[1;30m\033[40m \033[0m"
        fi
    done < uniquelinks.4chan

    finish_progress $row

    # Clear the SCRAPED counter in the title bar.
    tput cup $top_box_t $((top_box_l + 20))
    printf '\033[30m\033[47m%*s\033[0m' 20 ''
}

# Phase 1: collect thread links from the board's first page and pages 1-15.
scrap_links()
{
    local i row=$((top_box_t + 4))

    rm -f links.4chan                          # start with a clean output file
    start_progress $row "SCRAPING LINKS"

    # First page
    board_page_links "$site" >> links.4chan

    # Remaining pages, one dash of progress each
    for i in {1..15}; do
        tput cup $row $((top_box_l + 20 + i))
        echo -en "\033[1;30m\033[40m-\033[0m"
        board_page_links "$site$i" >> links.4chan
    done

    finish_progress $row
}

# Title bar on the top row: program name on the left, start time and date on the right.
draw_top_box()
{
    local i
    for ((i = 0; i < top_box_width; i++)); do
        tput cup $top_box_t $((top_box_l + i))
        echo -en "\033[30m\033[47m \033[0m"
    done
    tput cup $top_box_t $((top_box_l + 2))
    echo -en "\033[30m\033[47mDWM v.1.0\033[0m"
    tput cup $top_box_t $((cols - 20))
    echo -en "\033[30m\033[47m$time | $today\033[0m"
}

# Status bar on the last row (rows are numbered 0 .. lines-1).
draw_bottom_box()
{
    local i bottom=$((lines - 1))
    for ((i = 0; i < top_box_width; i++)); do
        tput cup $bottom $((top_box_l + i))
        echo -en "\033[30m\033[47m \033[0m"
    done
    tput cup $bottom $top_box_l
    echo -en "\033[30m\033[47m DOWNLOADED FILES: $download_counter\033[0m"
}

# Frame around the "#" / "FILE NAME" header row.
draw_panel()
{
    local i row col
    # Horizontal rules above and below the header row.
    for row in $((top_box_t + 1)) $((top_box_t + 3)); do
        for ((i = 0; i < top_box_width; i++)); do
            tput cup $row $((top_box_l + i))
            echo -en "\033[1;30m\033[40m-\033[0m"
        done
    done
    # Vertical separators on the header row, with "+" where they meet the rules.
    for col in $top_box_l $((top_box_l + 4)) $((top_box_r - 5)) $top_box_r; do
        tput cup $((top_box_t + 2)) $col
        echo -en "\033[1;30m\033[40m|\033[0m"
        for row in $((top_box_t + 1)) $((top_box_t + 3)); do
            tput cup $row $col
            echo -en "\033[1;30m\033[40m+\033[0m"
        done
    done
}
```
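The download phase skips files whose names are already listed in `4chan_download.log`. Below is a minimal, stand-alone sketch of that bookkeeping: `url_filename`, `already_downloaded`, `mark_downloaded` and the `LOG` variable are hypothetical names, and the log format (one file name per line) is an assumption. Matching with `grep -qxF` compares whole lines literally, so `1.jpg` does not match a logged `11.jpg`, and the dot is not treated as a regex wildcard.

```bash
#!/usr/bin/env bash
# Hypothetical log of downloaded file names, one per line.
LOG=${LOG:-./4chan_download.log}

# Last path component of a URL, e.g. //host/hr/src/123.jpg -> 123.jpg
url_filename() {
    printf '%s\n' "${1##*/}"
}

# Succeeds (exit status 0) when the name in $1 is already in the log.
already_downloaded() {
    [[ -f $LOG ]] && grep -qxF -- "$1" "$LOG"
}

# Records the name in $1 so the next run skips it.
mark_downloaded() {
    printf '%s\n' "$1" >> "$LOG"
}

# Usage with a throwaway log and no network access. Prints:
#   would download 1348483848.jpg
#   skipping 1348483848.jpg
LOG=$(mktemp)
name=$(url_filename "//images.example.org/hr/src/1348483848.jpg")
already_downloaded "$name" || echo "would download $name"
mark_downloaded "$name"
already_downloaded "$name" && echo "skipping $name"
rm -f "$LOG"
```

Keeping the log outside the dated download directory is what lets a run on a later day skip pictures fetched earlier.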

Be aware that all scripts are run at your own risk and while every script has been written with the intention of minimising the potential for unintended consequences, the owners, hosting providers and contributors cannot be held responsible for any misuse or script problems.